In late 2004, Andre Geim, a physics professor at the University of Manchester, and his colleague Konstantin Novoselov isolated graphene from graphite, making it the first two-dimensional material ever to be isolated. Graphene, a single layer of carbon atoms arranged in a hexagonal lattice, had been theorised for decades, but it was widely assumed that isolating it was impossible.

The discovery, which won Geim and Konstantin Novoselov the 2010 Nobel Prize in Physics, has been hailed as one of the most significant scientific breakthroughs of this century.

As a disruptive technology, graphene could replace many existing materials and technologies, and it has potential applications in virtually every area of life. The sheer breadth of industries, processes, and products in which graphene could be used can be attributed to its remarkable properties:

- It is lightweight and flexible, despite being much stronger than steel.

- It is an excellent conductor of both electricity and heat.

- It was the first two-dimensional material ever isolated.

- It is transparent and, at a single atom thick, is often described as around a million times thinner than a human hair.

Of all the places where graphene’s use could have a significant impact, none are quite as interesting and exciting as electronics, particularly computing.

What Could Graphene Mean for Electronics?

In theory, graphene could enable the kinds of next-generation technologies currently reserved for sci-fi films: think unbreakable phones, bendable laptops, and super-fast transistors and semiconductors. Because it is extremely thin, flexible, and conductive, it is an ideal candidate for use in electronics, and, being a single atom thick, it could meet the industry's need for ever-smaller components.

Graphene could be used to make glass touchscreens far more resistant to cracking and shattering. It is also theoretically possible to make flexible electronics that you can roll up like a newspaper or wear around your wrist.

Beyond shatterproof touchscreens and bendable electronics, University of Manchester researchers have already used graphene to produce what was billed as the world's smallest transistor, paving the way for further miniaturisation. Miniaturisation is one of the greatest challenges currently facing the electronics industry, particularly for manufacturers of specialist health products.

Although great strides have been made with graphene’s technological applications, particularly to consumer electronics, there is one area which is currently lagging: computing.

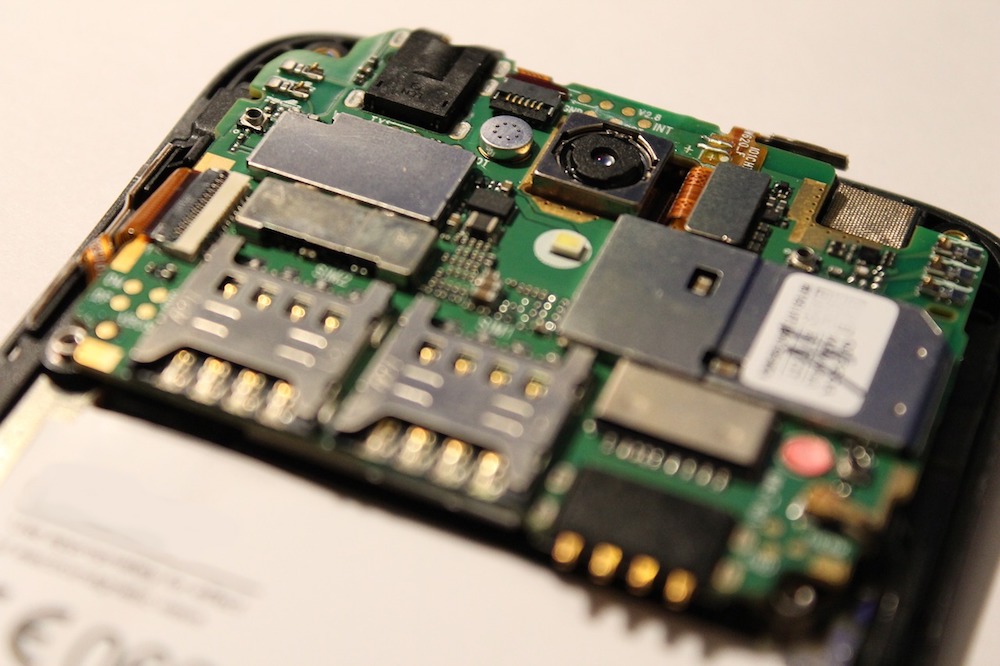

Image licensed by Bigstock.

The Problem Facing Graphene’s Use in Computing

Computing is, by far, where this humble lattice of carbon atoms could have the most profound impact, so, true to Sod's law, it is also where scientists and engineers face the biggest problems.

Modern computing relies heavily on silicon, the semiconductor that made possible the electronics revolution and the computerisation of the late 20th and early 21st centuries. Simply put, silicon underpins virtually everything in our digital world: modern life depends on silicon-based integrated circuits built from transistors that can be switched on and off.

For decades, the transistors on silicon chips have been getting smaller and smaller, and it is this shrinking that created the digital age as we know it. But with the rise of artificial intelligence, the Internet of Things, robotics, and other intensive computing and supercomputing workloads, silicon is approaching its performance limits, particularly in applications that demand higher speed, light detection, and reduced latency.

Silicon transistors degrade over time, they leak current once they are shrunk far enough to pack them closely together, and their performance drops at high temperatures. Given that modern integrated circuits are worked harder, need to be smaller, and contain billions of transistors that generate a great deal of heat, silicon's limits become clear. For context, the processor in a modern flagship smartphone contains well over 10 billion transistors.

Although it may be somewhat premature to discuss silicon's decline (it is not expected to fizzle out for another couple of decades), pondering possible successors is not.

Where Does Graphene Come Into This?

Perhaps the most celebrated property described in Geim's 2004 paper was graphene's high electron mobility, which could, in principle, be used to increase computing power by a factor of a hundred or more.

This claim shook the semiconductor industry, which was struggling to keep up with Moore's Law: the observation that the number of transistors on a chip, and with it computing performance, doubles roughly every two years. For more than five decades, engineers have kept pace by cramming ever more transistors onto silicon chips roughly the size of an adult fingernail.
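To make the doubling rule concrete, here is a minimal Python sketch that projects transistor counts under an idealised Moore's Law, assuming an exact doubling every two years. The function name `projected_transistors` is hypothetical, and the baseline of roughly 2,300 transistors for the Intel 4004 (1971) is used purely as an illustrative starting point; real chips have only approximated this curve.

```python
def projected_transistors(start_count: int, start_year: int, year: int,
                          doubling_period_years: float = 2.0) -> float:
    """Project a transistor count under an idealised Moore's Law.

    Assumes the count doubles exactly once every `doubling_period_years`,
    which real chips only approximate.
    """
    if year < start_year:
        raise ValueError("year must not precede start_year")
    doublings = (year - start_year) / doubling_period_years
    return start_count * 2 ** doublings


# Illustrative baseline: the Intel 4004 (1971) had roughly 2,300 transistors.
for year in (1971, 1981, 1991, 2001, 2011, 2021):
    print(year, f"{projected_transistors(2300, 1971, year):,.0f}")
```

Under this idealised rule, 50 years of doubling multiplies the starting count by 2^25 (about 33.5 million), taking 2,300 transistors to roughly 77 billion. The exponential form is what makes each further doubling so hard to sustain.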

Because of the problems discussed above, this is fast becoming unsustainable and, therefore, the time for a new material that can replace silicon is now. Graphene could be the answer to this, but there is a problem.

Semiconductors like silicon are so called because their conductivity can be switched on and off by an applied electric field. In the logic chips found in computer circuits, this switching produces binary code, the zeros and ones that computers understand. Graphene cannot do this because it is a semimetal with zero bandgap.

Since Geim's 2004 discovery, engineers have worked tirelessly to open a bandgap in graphene, the electrical property that allows semiconductors to act as switches. Fourteen years on, however, nobody has managed to do this in a commercially viable way, and it has been speculated that doing so would require changing graphene so much that it would no longer be graphene.
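A rough way to see why the bandgap matters: in a semiconductor, the density of thermally excited charge carriers (which can conduct even when a device is meant to be off) scales approximately with the Boltzmann factor exp(-E_g / 2k_BT), where E_g is the bandgap. The Python sketch below compares that factor for silicon (bandgap about 1.12 eV at room temperature) with a zero-bandgap material. It is a simplified textbook approximation that ignores the material-specific prefactor and is only qualitative for a semimetal like graphene; `boltzmann_suppression` is a hypothetical helper name.

```python
import math

BOLTZMANN_EV_PER_K = 8.617333262e-5  # Boltzmann constant in eV/K


def boltzmann_suppression(bandgap_ev: float, temperature_k: float = 300.0) -> float:
    """Return exp(-E_g / (2 k_B T)), the factor by which a bandgap suppresses
    thermally generated carriers (intrinsic carrier density scales with it).

    Simplified: ignores the material-dependent prefactor.
    """
    return math.exp(-bandgap_ev / (2 * BOLTZMANN_EV_PER_K * temperature_k))


silicon = boltzmann_suppression(1.12)   # silicon, about 1.12 eV at 300 K
zero_gap = boltzmann_suppression(0.0)   # zero-bandgap material, e.g. pristine graphene
print(f"silicon:  {silicon:.2e}")
print(f"zero gap: {zero_gap:.2e}")
```

For silicon the factor comes out at roughly 4 × 10^-10, so very few carriers are thermally available to conduct when the transistor should be off; with no bandgap the factor is exactly 1, and nothing suppresses them. This is the switching property graphene lacks.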

Simply put, the biggest obstacle to graphene's use in computing is that it can't be turned off. A material with super-fast electron movement is all well and good, but if it can't be switched off, it will leak current, and therefore consume power, constantly.
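The power cost of a switch that cannot fully turn off can be estimated, to first order, as static (leakage) power P ≈ N × V × I_off, where N is the number of transistors, V the supply voltage, and I_off the current each one leaks when "off". The Python sketch below uses entirely hypothetical numbers (one billion transistors, a 1 V supply, 100 µA on-current) and two illustrative on/off current ratios: a large one for a well-behaved switch and a very small one for a device, such as a zero-bandgap channel, that barely switches off. It ignores the power-gating and other techniques real chips use, so treat it as an order-of-magnitude illustration only; `static_power_watts` is a hypothetical name.

```python
def static_power_watts(n_transistors: float, supply_volts: float,
                       on_current_amps: float, on_off_ratio: float) -> float:
    """First-order static (leakage) power: P = N * V * I_off.

    I_off is derived from the on-current and the on/off current ratio.
    All inputs are illustrative assumptions, not measured device data.
    """
    off_current_amps = on_current_amps / on_off_ratio
    return n_transistors * supply_volts * off_current_amps


# Hypothetical chip: 1 billion transistors at 1 V, 100 microamps on-current each.
N, V, I_ON = 1e9, 1.0, 100e-6

good_switch = static_power_watts(N, V, I_ON, on_off_ratio=1e6)  # switches off well
poor_switch = static_power_watts(N, V, I_ON, on_off_ratio=10)   # barely switches off
print(f"on/off ratio 1e6: {good_switch:.3g} W")
print(f"on/off ratio 10:  {poor_switch:.3g} W")
```

With these assumed figures, the well-behaved switch leaks about 0.1 W in total while the poor one leaks about 10,000 W, five orders of magnitude more. That gap is why a material that cannot be turned off is impractical for logic chips, no matter how fast its electrons move.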

Image courtesy of Pixabay.

Graphene’s Future in Computing

So, what does all this mean for graphene’s future in computing?

Hundreds of millions have been spent on research in this area, and the consensus is that a solution is out there; it is only a matter of time until engineers either open up a semiconducting bandgap in graphene or find a way to integrate it with silicon that overcomes the problems above.

Let's also not forget that graphene is not the be-all and end-all of future computing. Although silicon has its limits and graphene's properties would make it an ideal successor, there are other options, such as quantum computing and next-generation semiconductors like gallium nitride.

Like graphene, these promise to outperform silicon in speed or efficiency for certain tasks. What's more, some are already being used to help build the next generation of smartphones and the 5G (and, eventually, 6G) networks they rely on.

Graphene, whilst in theory a brilliant material with enormous potential, owes much of its prominence to disproportionate hype: it is an electronically useful material in an age obsessed with electronic devices. Whilst this hype has attracted lots of investment, graphene currently has very little to show for it as far as computing is concerned, and engineers should be aware of this.